Deploying Applications with Ease: A Guide to Containerization

29 November 2024

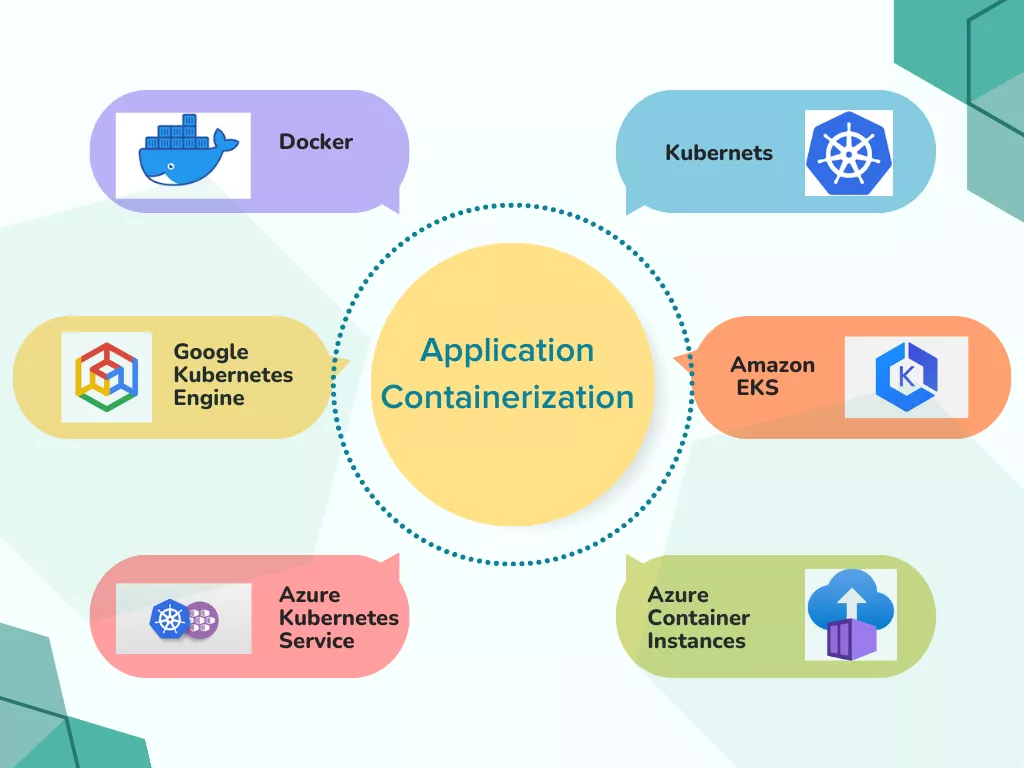

What are Containers?

A containerised application is one that runs in an isolated runtime environment called a container. The container packages the application together with all of its dependencies, including system libraries, binaries, and configuration files.